Yuxiang Yang

I'm a Research Scientist at Google DeepMind, where my work focuses on the intersection of AI and robotics. Before that, I completed my PhD at the University of Washington, where I worked with Prof. Byron Boots in the UW Robot Learning Lab. My research interests lie in combining machine learning and optimal control, with applications to quadrupedal robots. Before my PhD, I obtained my undergraduate degree at UC Berkeley and spent two years as an AI Resident at Google.

Email / GitHub / Google Scholar / LinkedIn / CV

News

Research

I'm generally interested in robotics, control, and learning. I would love to see them combined to solve complex, dynamic, real-world problems.

Agile Continuous Jumping in Discontinuous Terrains
Yuxiang Yang, Guanya Shi, Changyi Lin, Xiangyun Meng, Rosario Scalise, Mateo Guaman Castro, Wenhao Yu, Tingnan Zhang, Ding Zhao, Jie Tan, Byron Boots
International Conference on Robotics and Automation (ICRA) 2025 (Under Review)
arxiv / video / code / website
By augmenting the hierarchical jumping framework (CAJun) with perception and performing careful system identification, we enable the robot to jump continuously and adapt to the terrain on challenging surfaces such as stairs and stepping stones.

|

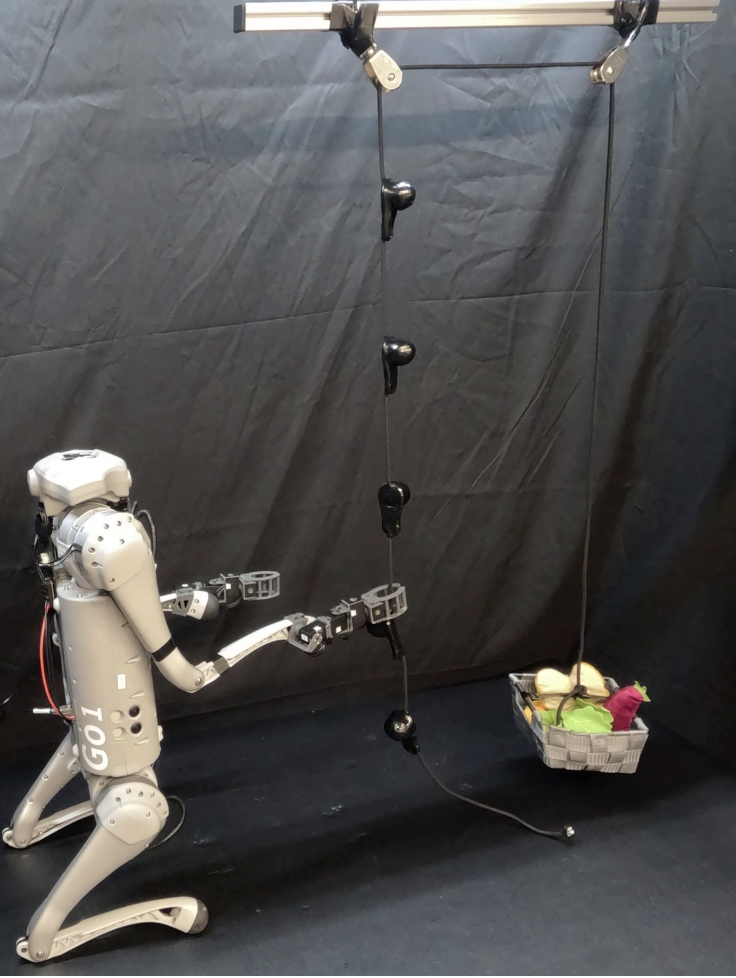

LocoMan: Advancing Versatile Quadrupedal Dexterity with Lightweight Loco-Manipulators
Changyi Lin, Xingyu Liu, Yuxiang Yang, Yaru Niu, Wenhao Yu, Tingnan Zhang, Jie Tan, Byron Boots, Ding Zhao
International Conference on Intelligent Robots and Systems (IROS) 2024
arxiv / video / code / website
We augment the manipulation capabilities of quadrupedal robots with custom-designed lightweight grippers and show that the resulting system can perform a wide variety of dexterous manipulation tasks.

CAJun: Continuous Adaptive Jumping using a Learned Centroidal Controller
Yuxiang Yang, Tingnan Zhang, Erwin Coumans, Jie Tan, Byron Boots
Conference on Robot Learning (CoRL) 2023
arxiv / video / code / website
We design a GPU-accelerated, general-purpose, hierarchical framework to learn continuous, long-distance, and adaptive jumping for quadrupedal robots.

Continuous Versatile Jumping using Learned Action Residuals
Yuxiang Yang, Xiangyun Meng, Wenhao Yu, Tingnan Zhang, Jie Tan, Byron Boots
Learning for Dynamics and Control (L4DC) 2023
arxiv / website
We enable omnidirectional jumping and jump-turns by combining heuristic controllers with learned action residuals.

Learning Semantics-Aware Locomotion Skills from Human Demonstrations
Yuxiang Yang, Xiangyun Meng, Wenhao Yu, Tingnan Zhang, Jie Tan, Byron Boots
Conference on Robot Learning (CoRL) 2022
arxiv / video / website
We build a framework for quadrupedal robots to learn off-road locomotion skills based on perceived terrain semantics.

Fast and Efficient Locomotion via Learned Gait Transitions
Yuxiang Yang, Tingnan Zhang, Erwin Coumans, Jie Tan, Byron Boots
Conference on Robot Learning (CoRL) 2021, Best Systems Paper Award Finalist
arxiv / video / code / website
We design a hierarchical framework that enables quadrupedal robots to learn energy-efficient gait patterns.

|

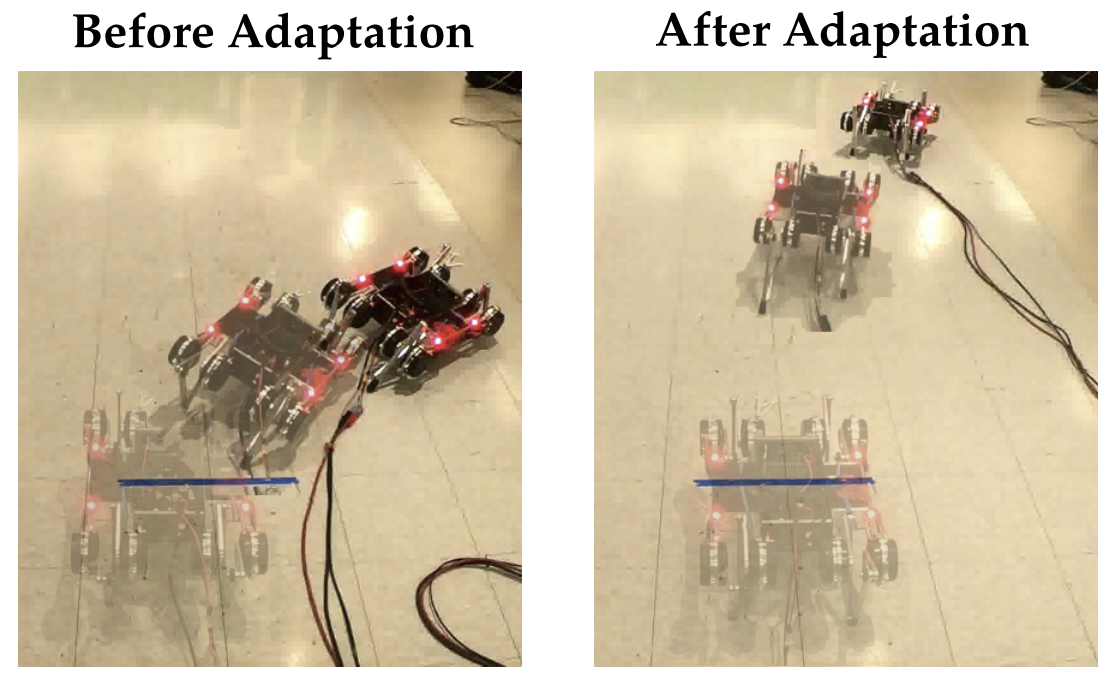

Rapidly Adaptable Legged Robots via Evolutionary Meta-Learning
Xingyou Song*, Yuxiang Yang*, Krzysztof Choromanski, Ken Caluwaerts, Wenbo Gao, Chelsea Finn, Jie Tan
International Conference on Intelligent Robots and Systems (IROS) 2020
arxiv / video
We use meta-learning based on evolution strategies (ES) to perform dynamics adaptation on a real legged robot.

|

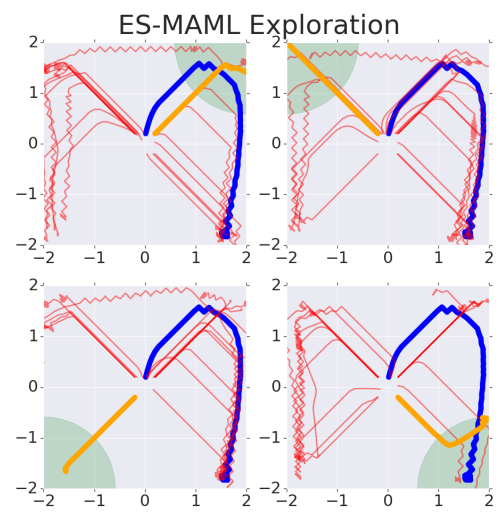

ES-MAML: Simple Hessian-Free Meta Learning
Xingyou Song, Wenbo Gao, Yuxiang Yang, Krzysztof Choromanski, Aldo Pacchiano, Yunhao Tang
International Conference on Learning Representations (ICLR) 2020
arxiv
We introduce ES-MAML, a new framework for solving the model-agnostic meta-learning (MAML) problem based on Evolution Strategies (ES).

Data Efficient Reinforcement Learning for Legged Robots
Yuxiang Yang, Ken Caluwaerts, Atil Iscen, Tingnan Zhang, Jie Tan, Vikas Sindhwani
Conference on Robot Learning (CoRL)
arxiv / video
We design a model-based framework that learns to walk from less than five minutes of data and generalizes to unseen tasks.

|

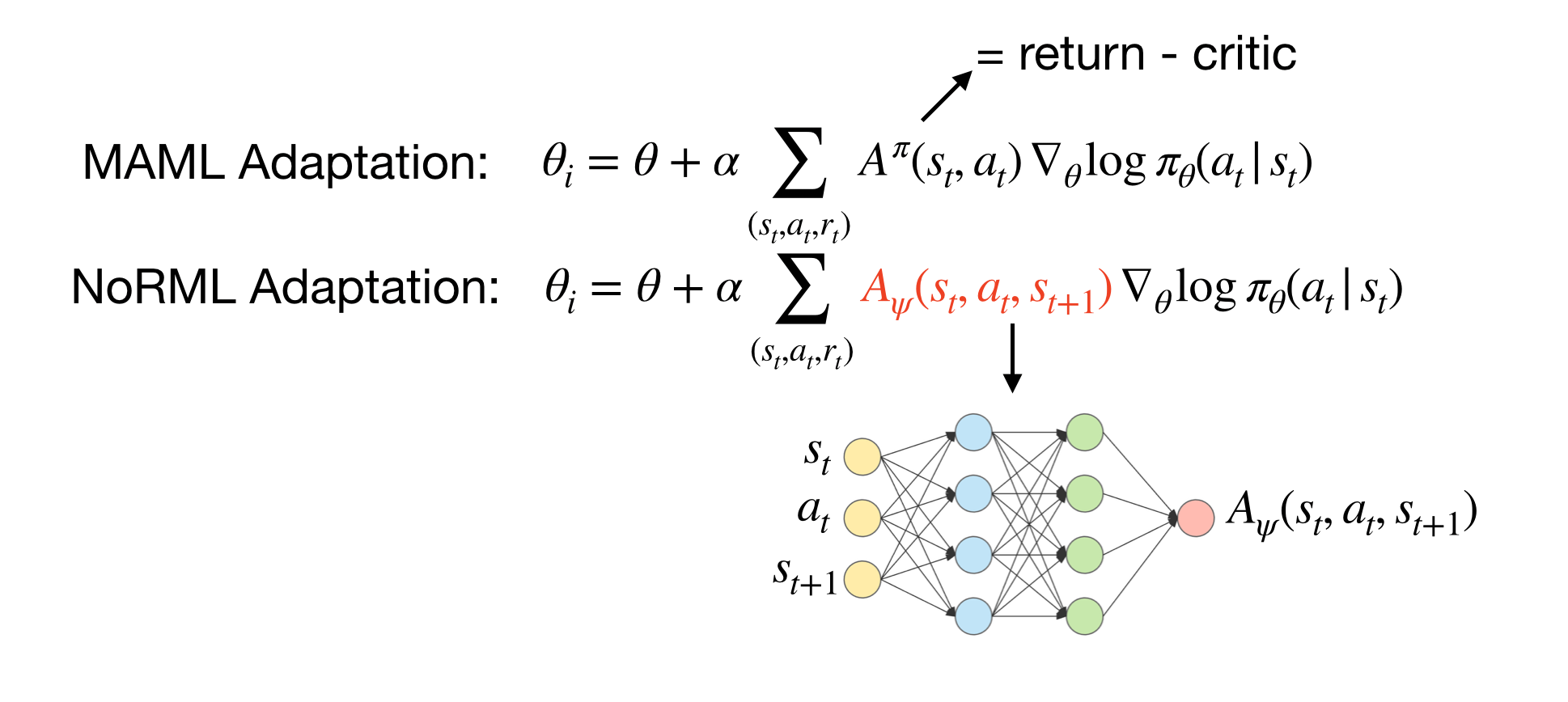

NoRML: No-Reward Meta Learning
Yuxiang Yang, Ken Caluwaerts, Atil Iscen, Jie Tan, Chelsea Finn
International Conference on Autonomous Agents and Multiagent Systems (AAMAS)
arxiv / code / website
We introduce a new meta-reinforcement learning algorithm that is more effective at adapting to changes in dynamics.

OpenRoACH: A Durable Open-Source Hexapedal Platform
Liyu Wang, Yuxiang Yang, Gustavo Correa, Konstantinos Karydis, Ronald S Fearing
IEEE International Conference on Robotics and Automation (ICRA)
arxiv / video / website
We present an open-source, low-cost, ROS-enabled legged robot platform for research and education.

Projects

Python Environment for Unitree Robots

Building on the motion_imitation repo, I first developed a Python-based framework for Unitree's A1 robot. The framework includes a PyBullet-based simulation, an interface for direct sim-to-real transfer, and a reimplementation of the Convex MPC controller for basic motion control. In 2021, I refactored the environment to remove unnecessary dependencies; the new environment is available in the open-source repo of my CoRL 2021 paper. In 2023-2024, I migrated the entire framework to Isaac Gym for GPU-accelerated simulation and training. Check here for the non-perception version, and here for the perception-integrated version.

Design and source code from Jon Barron's website